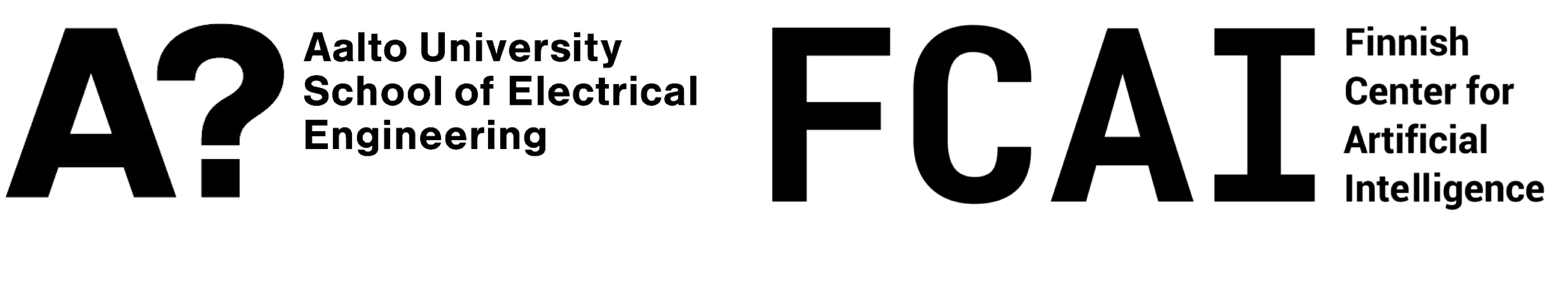

Understanding visual search in graphical user interfaces [Data]

Putkonen et al. examine how people visually search for items in graphical user interfaces using eye-tracking data. The study shows that search behavior depends more on interface and cue type than on visual complexity, and that it follows a common Guess-Scan-Confirm pattern.

No Evidence for LLMs Being Useful in Problem Reframing [Paper][Code&Data]

Shin et al. CHI’25: Reports a dataset and experimental analysis evaluating Large Language Models (LLMs) in creative problem reframing tasks. The study provides human-LLM comparison data and performance metrics showing no reliable benefit from current LLMs.

In: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, New York, NY, USA, 2025.

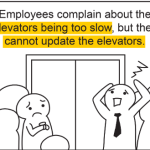

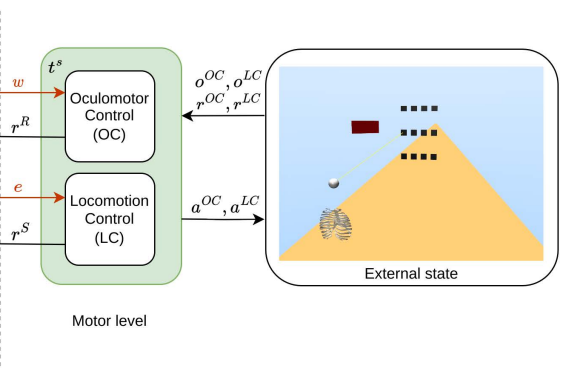

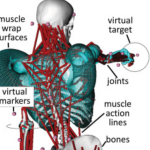

Real-time 3D Target Inference via Biomechanical Simulation [Paper][Code][Data]

Moon et al. CHI’24: Presents a biomechanical simulation model for real-time prediction of 3D movement targets. The model infers intended motion endpoints from partial kinematic data, improving interaction tracking in immersive systems.

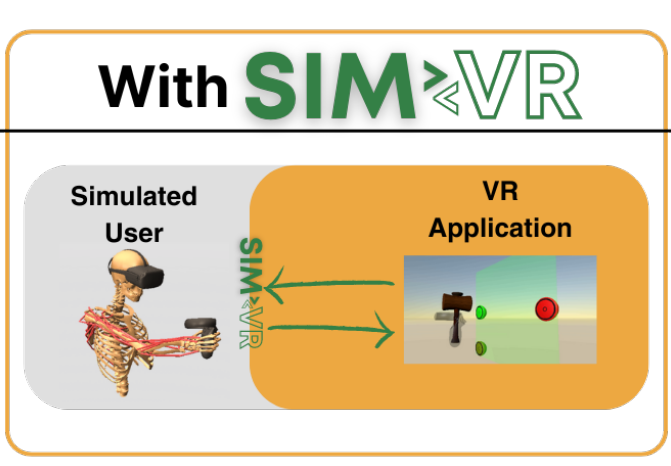

SIM2VR: Towards Automated Biomechanical Testing in VR [Code] [Data]

Fischer et al. UIST’24: Introduces a VR-based pipeline for automated biomechanical testing. The system simulates and evaluates user movement dynamics in virtual reality, using reinforcement learning and physics models to assess interaction ergonomics.

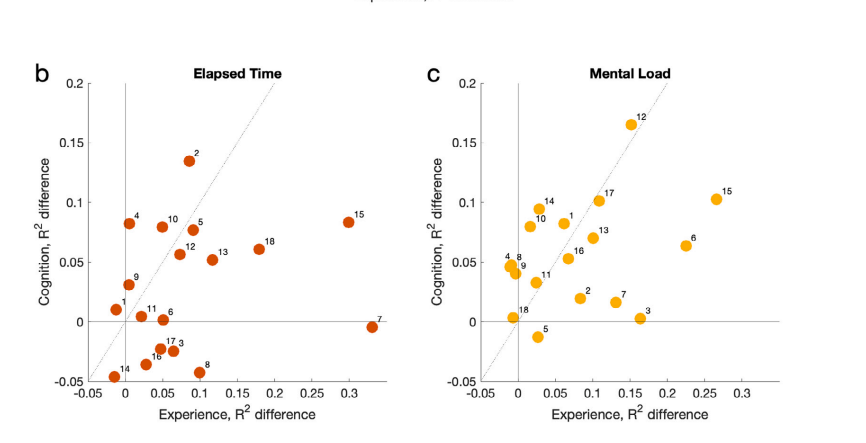

Cognitive abilities predict performance in everyday computer tasks [Data]

This paper shows that cognitive abilities, particularly working memory and executive control, strongly predict performance in everyday computer tasks. Higher cognitive skills lead to faster, more accurate task completion, independent of experience.

In: International Journal of Human-Computer Studies, 2024.

Heads-Up Multitasker: Simulating Attention Switching On Optical Head-Mounted Displays [Data]

Bai et al. introduce a model that simulates how users switch attention between tasks like reading and walking on optical head-mounted displays. It explains multi-tasking behavior as optimal attention allocation balancing task performance and safety.

In: Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 2024.

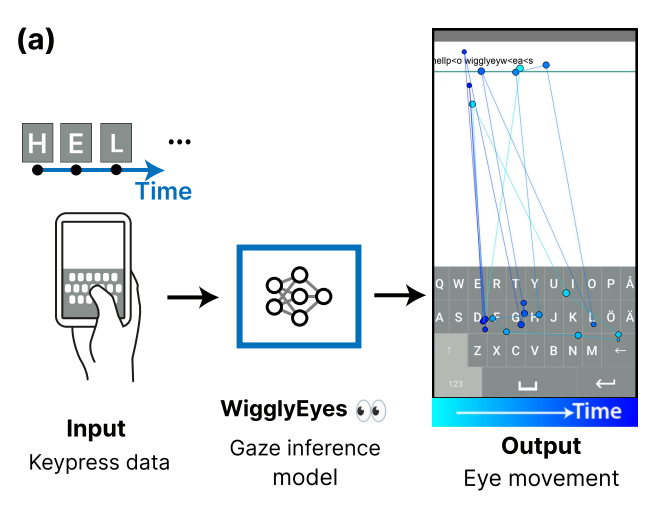

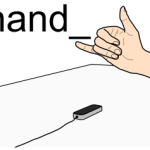

WigglyEyes: Inferring Eye Movements from Keypress Data [Code] [Data]

Zhu et al. 2024: Proposes a novel method to infer eye movement patterns from typing behavior using keypress timing and sequence data. Enables eye-tracking inference without cameras, bridging typing and visual attention analysis.

In: arXiv preprint arXiv:2412.15669, 2024.

UEyes: Eye-tracking dataset for GUIs (CHI’23) [Paper][Video]

Jiang et al. CHI’23: UEyes is a large-scale eye-tracking dataset capturing visual saliency across diverse user interfaces. It contains gaze data from 62 participants viewing 1,980 screenshots from web, mobile, desktop, and poster interfaces, recorded with a GP3 HD eye tracker. The dataset offers raw gaze-log CSVs, fixation maps and scanpaths, saliency heatmaps of multiple durations, screenshot metadata, and UI-type labels.

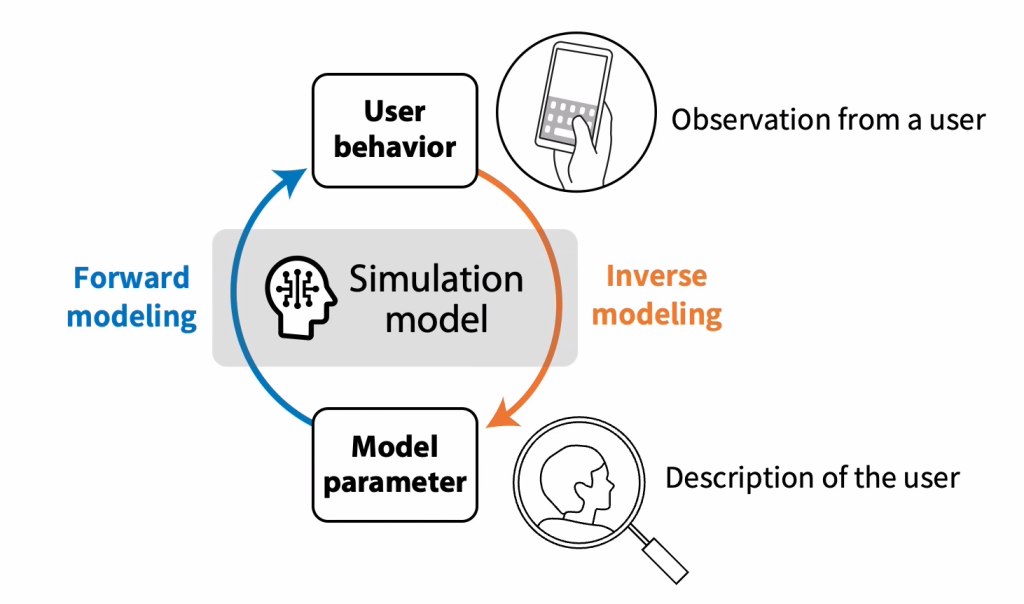

Amortized inference with user simulations (CHI’23) [Paper][Video][Data]

Moon et al. CHI’23: Amortized Inference with User Simulations speeds up parameter estimation in simulation-based user models. Synthetic data sampled from model parameters train a neural density estimator that approximates the posterior over parameters from observations, enabling faster and scalable inference.

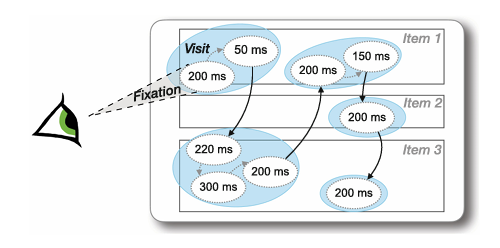

Fragmented Visual Attention in Web Browsing: Weibull Analysis of Item Visit Times [Paper][Code&Data]

Putkonen et al. ECIR’23: Presents a dataset and statistical analysis of eye-tracking data from web browsing tasks. Using Weibull distribution fitting, the study quantifies fragmented visual attention patterns, offering open data and code for attention modeling in online reading.
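For readers who want to try Weibull fitting on their own visit-time data, the following is a minimal sketch of a maximum-likelihood fit using only the Python standard library. It is not the authors' released code: the function name `fit_weibull`, the bisection bounds, and the two-parameter (shape, scale) form are assumptions made for illustration.

```python
import math


def fit_weibull(samples, lo=0.05, hi=50.0, iters=100):
    """Maximum-likelihood fit of a two-parameter Weibull distribution.

    Returns (shape, scale). `samples` must be strictly positive durations.
    The bounds `lo` and `hi` bracket the shape parameter (an assumption).
    """
    logs = [math.log(x) for x in samples]
    mean_log = sum(logs) / len(logs)

    def score(k):
        # Profile-likelihood equation for the shape k; its root is the MLE.
        powered = [x ** k for x in samples]
        weighted = sum(p * l for p, l in zip(powered, logs))
        return weighted / sum(powered) - 1.0 / k - mean_log

    # score(k) increases with k, so bisection finds the root.
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if score(mid) > 0:
            hi = mid
        else:
            lo = mid

    shape = (lo + hi) / 2.0
    # Given the shape, the scale has a closed-form MLE.
    scale = (sum(x ** shape for x in samples) / len(samples)) ** (1.0 / shape)
    return shape, scale
```

A fitted shape below 1 corresponds to a hazard rate that decreases over time, while a shape above 1 corresponds to an increasing one, so the shape parameter is usually the quantity of interest when interpreting the fit.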

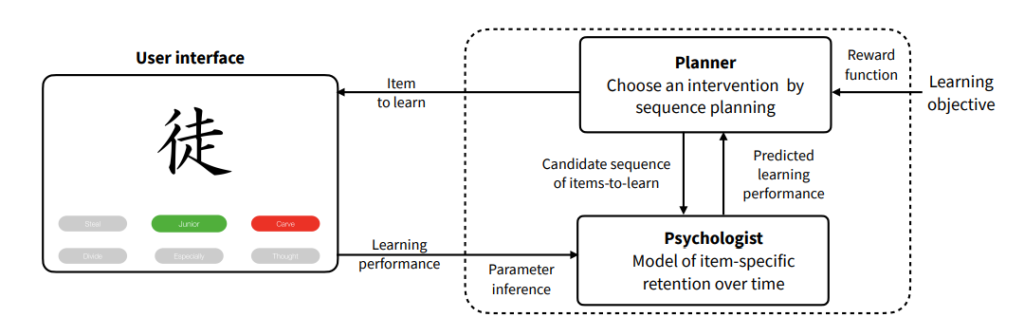

Improving Artificial Teachers by Considering how People Learn and Forget [Paper][Code][Data]

Nioche et al. IUI’21: Presents a computational model of learning and forgetting for adaptive tutoring systems. By modeling human memory decay, the artificial teacher optimizes lesson sequencing and feedback timing to improve user retention.
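As a rough illustration of how a memory-decay model can drive item selection, the sketch below assumes an exponential forgetting curve whose decay rate shrinks with each review. The parameter names (`alpha`, `beta`), the exact functional form, and the threshold rule in `pick_next_item` are assumptions for illustration, not the paper's implementation.

```python
import math


def recall_probability(alpha, beta, n_reviews, elapsed):
    """Probability of recalling an item `elapsed` time units after its last review.

    Assumed form: exp(-alpha * (1 - beta)**(n_reviews - 1) * elapsed), where
    alpha is the initial forgetting rate and beta (0..1) is how much each
    further review slows forgetting. Unseen items are never recalled.
    """
    if n_reviews == 0:
        return 0.0
    rate = alpha * (1.0 - beta) ** (n_reviews - 1)
    return math.exp(-rate * elapsed)


def pick_next_item(history, now, alpha, beta, threshold=0.9):
    """Simple threshold heuristic (hypothetical, not the paper's planner).

    `history` maps item -> (n_reviews, last_review_time). Returns the seen
    item with the lowest recall probability if it falls below `threshold`,
    otherwise None, signalling that a new item can be introduced.
    """
    weakest, weakest_p = None, 1.0
    for item, (n_reviews, last_seen) in history.items():
        p = recall_probability(alpha, beta, n_reviews, now - last_seen)
        if p < weakest_p:
            weakest, weakest_p = item, p
    return weakest if weakest_p < threshold else None
```

With this rule, items reviewed fewer times decay faster and are scheduled sooner, which is the basic intuition behind sequencing lessons around forgetting.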

Conversations with GUIs (DIS ’21) [Paper][Data][Video]

Todi et al. DIS’21: Conversations with GUIs introduces a dataset capturing how users verbally describe and adjust graphical user interfaces using natural language. The dataset includes multimodal interaction logs, transcripts of spoken GUI modification commands, system responses, and resulting interface states. It contains recordings from controlled user studies where participants customized real application interfaces through speech, enabling analysis of natural-language-based GUI manipulation and interaction behavior.

Mouse sensor position optimization (CHI’20) [Paper][Video]

Kim et al. CHI’20: Optimal Sensor Position for a Computer Mouse provides an experimental dataset on how mouse sensor placement (front, center, rear) affects pointing performance. It includes movement trajectories, throughput, and error metrics from controlled pointing tasks, plus data from a virtual sensor prototype simulating repositioning.
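Throughput in pointing studies is commonly derived from Fitts' law. The sketch below uses the nominal Shannon formulation of the index of difficulty; whether the dataset uses nominal or effective target widths is not stated here, so treat the function names and the formulation as assumptions.

```python
import math


def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1.0)


def throughput(distance, width, movement_time):
    """Throughput in bits per second for one pointing condition.

    `distance` and `width` share a unit (e.g. millimetres);
    `movement_time` is the mean movement time in seconds.
    """
    return index_of_difficulty(distance, width) / movement_time
```

For example, a 70 mm movement to a 10 mm target has an index of difficulty of 3 bits, so a mean movement time of 0.5 s gives a throughput of 6 bits/s.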

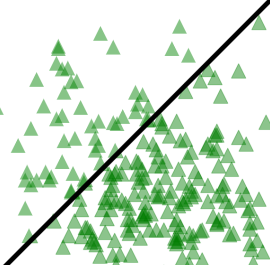

Fast Design Space Rendering of Scatterplots [Paper][Data]

Santala et al. EuroVis’20: Presents a computational visualization method for rapidly rendering scatterplot design spaces. Using OpenCV and dynamic sampling, the system allows designers to visually explore large numbers of scatterplot variations efficiently.

In: Kerren, Andreas; Garth, Christoph; Marai, G. Elisabeta (Ed.): EuroVis 2020 – Short Papers, The Eurographics Association, 2020, ISBN: 978-3-03868-106-9.

Enrico: A High-quality Dataset for Topic Modeling of Mobile UI Designs [Paper][Code&Data]

Leiva et al. MobileHCI’20: Publishes Enrico, a large, curated dataset of mobile UI screenshots and design metadata for topic modeling and interface analysis. The dataset enables large-scale research on mobile design patterns and visual clustering.

In: Proceedings of the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct, 2020.

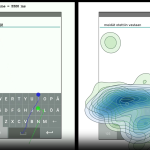

The How-WeType-Mobile dataset (CHI’20) [Paper]

Jiang et al. CHI’20: How We Type: Eye and Finger Movement Strategies in Mobile Typing provides a multimodal dataset of mobile typing behavior. It includes synchronized eye-tracking, finger motion (3D marker data), and key-press logs from a transcription task using one-finger and two-thumb typing. The data records inter-key intervals, gaze-finger coordination, error correction behavior, and typing speed across trials.

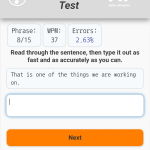

Typing37k dataset (MobileHCI’19) [Paper]

Palin et al. MobileHCI’19: How do People Type on Mobile Devices? introduces a massive mobile typing dataset collected via a browser-based transcription task with 37,370 participants. It records keystroke timings, error rates, participant demographics, device metadata, and usage of intelligent text entry techniques (e.g. autocorrect). The dataset supports CSV/SQL export, raw & processed tables, and includes code & analysis scripts for further research.

Rendering arbitrary force-displacement curves (UIST’18) [Paper]

Liao et al. CHI’20: The project provides measured physical button-press data for several button types (e.g. force-displacement curves including dynamic vibration/velocity-response). It supports a prototype (“Button Simulator”) that uses those measurements to reproduce tactile behaviour digitally or physically via a 3D-printed haptic device.

Surfing for Inspiration: Digital Inspirational Material in Design Practice [Paper][Survey]

Koch et al. DRS’18: Provides survey-based insights into how designers use digital sources for inspiration, highlighting common search habits, curation practices, and implications for creativity-support tools.

In: Design Research Society 2018 Catalyst (DRS2018), 2018.

Relating Experience Goals with Visual User Interface Design (IwC’18) [Paper]

Jokinen et al. IwC’18: Emotion in HCI introduces a multimodal dataset capturing users’ emotional responses during interactive tasks. The dataset includes synchronized facial video, physiological signals (heart rate, EDA), behavioral interaction logs, and self-reported emotion ratings on valence and arousal scales. Collected in controlled experiments across multiple interface contexts, the dataset enables research on emotion recognition, affective user modeling, and adaptive interfaces.

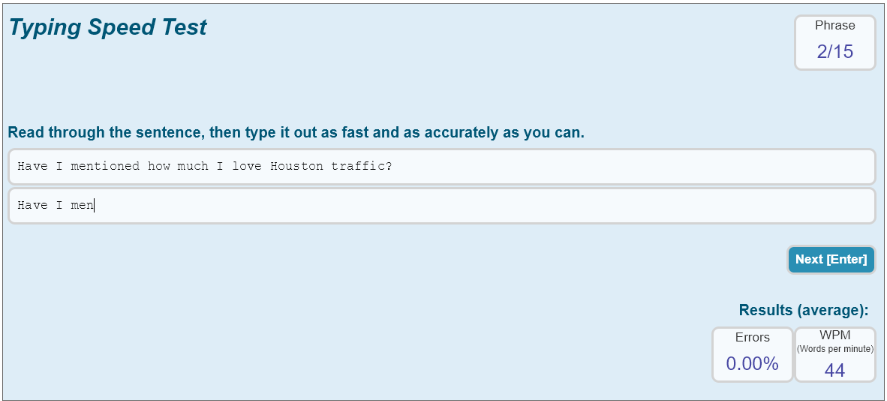

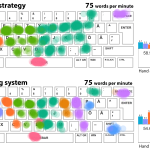

Observations on Typing from 136 Million Keystrokes (CHI’18) [Paper]

Dhakal et al. CHI’18: Observations on Typing from 136 Million Keystrokes is a large-scale typing dataset collected from an online typing-test service with ~168,000 participants. It contains raw keystroke-by-keystroke logs, timing metadata, and typing speed / error statistics; supports clustering of typist behaviors, analysis of rollover typing, and more.

Hand tracking: A hand manipulating an object (ECCV’16) [Paper][Data]

Sridhar et al. ECCV’16: Real-time Joint Tracking of a Hand Manipulating an Object from RGB-D Input offers a dataset of hand-object interaction captured with a single RGB-D camera. It includes annotated fingertip positions and object-corner coordinates across several sequences, for evaluating joint hand + object tracking under occlusion and motion.

The How-We-Type dataset (CHI’16) [Paper][Video]

Feit et al. CHI’16: How We Type: Movement Strategies and Performance in Everyday Typing introduces a multimodal typing dataset from a transcription task with self-taught and trained typists. It includes motion-capture marker trajectories (52 markers at 240 fps), keystroke logs with finger annotations, eye-tracking video/gaze points, and hand-video recordings. The dataset exceeds 300 GB and captures differences in typing strategies, speeds, hand movement, and gaze behavior.

Muscle coactivation clustering (TOCHI’15) [Paper]

Bachynskyi et al. TOCHI’15: Informing the Design of Novel Input Methods with Muscle Coactivation Clustering provides a biomechanical dataset clustering muscle-coactivation from a 3D pointing task. It includes per-frame biomechanical variables, performance measures, spatial/temporal motion data, and assigned cluster IDs for each movement sample. The data support analysis of movement fatigue, precision, and ergonomic patterns across muscle coactivation clusters.

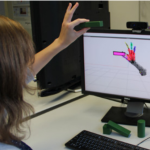

Investigating the Dexterity of Multi-Finger Input for Mid-Air Text Entry [Paper]

Sridhar et al. CHI’15: Presents a dataset and empirical study of mid-air multi-finger typing. Motion capture data and performance metrics quantify finger dexterity and coordination during gesture-based text input.

In: Proc. CHI’15, ACM 2015.

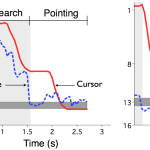

Menu selection data (CHI’14) [Paper]

Bailly et al. CHI’14: Model of Visual Search and Selection Time in Linear Menus defines a probabilistic model of gaze- and mouse-based menu selection in linear menus. It assumes users alternate between serial search and directed search strategies, plus a pointing movement sub-task, and predicts selection time as a probability distribution over gaze and cursor movements under five factors (menu length, menu organisation, target position, presence/absence, practice).