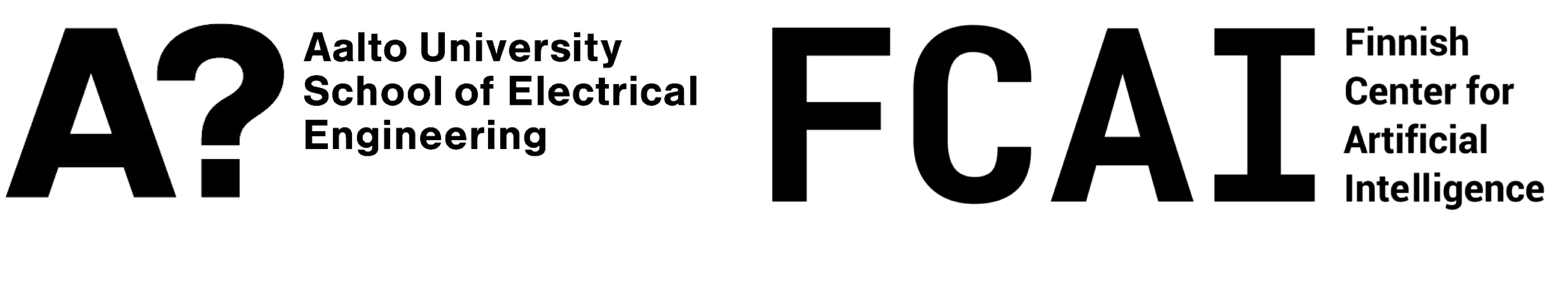

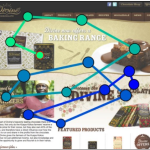

Chartist: Task-driven Eye Movement Control for Chart Reading [Paper][Code&Data]

Shi et al. CHI’25: Introduces a computational model of eye-movement control during chart reading. The model predicts fixations and saccades based on visual salience and task goals, simulating how users extract information from bar, line, and scatter plots.

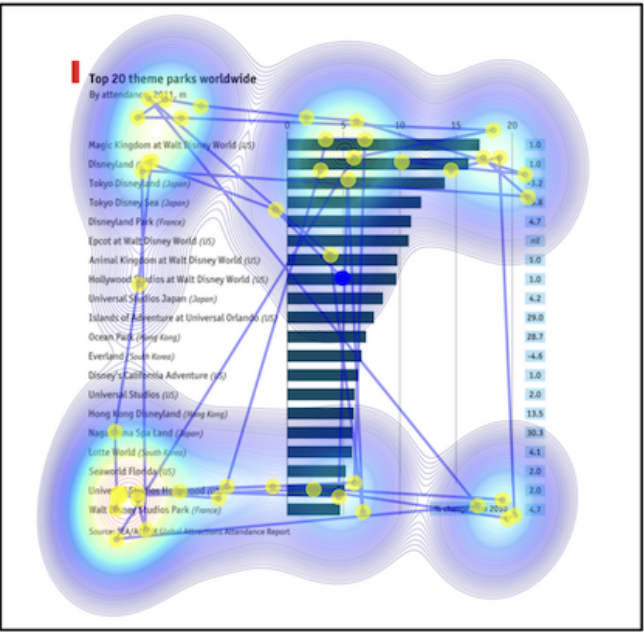

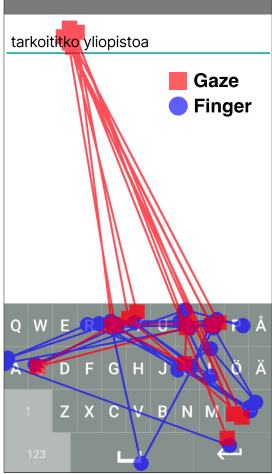

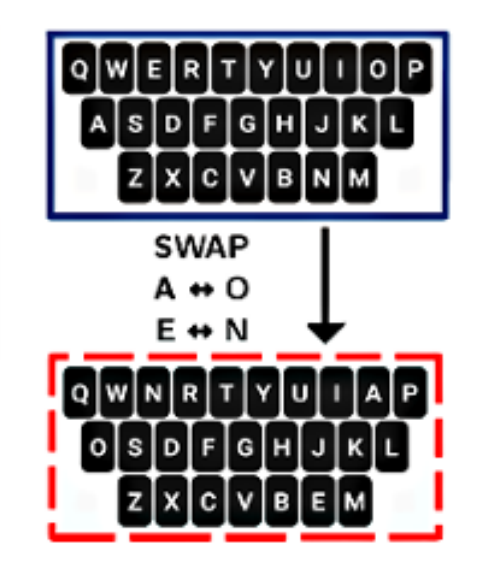

Simulating Errors in Touchscreen Typing [Paper][Code&Data]

Shi et al. CHI’25: Presents a generative model that simulates human touch-typing errors on mobile keyboards. The model reproduces spatial and temporal error patterns learned from empirical data, enabling evaluation of keyboard designs and autocorrection systems without user testing.

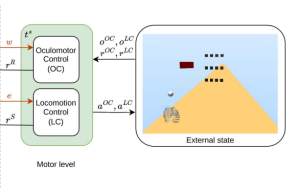

Pedestrian crossing decisions can be explained by bounded optimal decision-making under noisy visual perception [Paper]

Wang et al. show that pedestrian crossing decisions can be explained as boundedly optimal choices made under noisy visual perception. Apparent decision biases emerge as rational adaptations to perceptual uncertainty rather than as errors.

In: Transportation Research Part C: Emerging Technologies, vol. 171, pp. 104963, 2025, ISSN: 0968-090X.

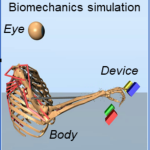

Real-time 3D Target Inference via Biomechanical Simulation [Paper][Code&Data]

Moon et al. CHI’24: Presents a biomechanical simulation model for real-time prediction of 3D movement targets. The model infers intended motion endpoints from partial kinematic data, improving interaction tracking in immersive systems.

Explaining crowdworker behaviour through computational rationality [Code&Data]

Hedderich & Oulasvirta, 2024: Introduces a computational-rationality model explaining how crowdworkers manage attention, effort, and reward in micro-task environments. Simulations replicate worker strategies and decision patterns in online platforms.

In: Behaviour & Information Technology, 2024.

CRTypist: Simulating Touchscreen Typing Behavior via Computational Rationality [Paper][Code&Data]

Shi et al. CHI’24: Develops a computational-rationality model simulating how people type on touchscreens, capturing trade-offs between speed and accuracy. The model enables prediction of individual typing behavior and optimization of layout designs.

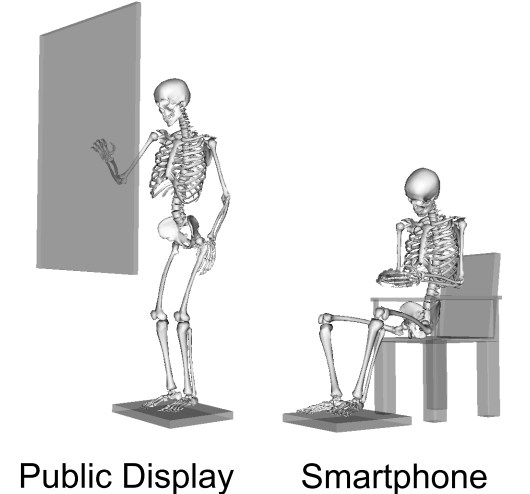

Heads-Up Multitasker: Simulating Attention Switching On Optical Head-Mounted Displays [Paper]

Bai et al. introduce a model that simulates how users switch attention between tasks like reading and walking on optical head-mounted displays. It explains multi-tasking behavior as optimal attention allocation balancing task performance and safety.

In: Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 2024.

EyeFormer: Predicting Personalized Scanpaths with Transformer-Guided Reinforcement Learning [Paper]

Jiang et al. UIST’24: Proposes a Transformer-based model for predicting personalized eye scanpaths. The model learns user-specific gaze patterns via reinforcement learning, improving prediction for chart and GUI perception tasks.

Rediscovering Affordance: A Reinforcement Learning Perspective [Paper][Video]

Liao et al. CHI’22: Proposes a reinforcement learning model to rediscover and formalize the concept of affordances in interaction. The model learns mappings between user goals, actions, and system states, offering computational insight into how affordances emerge in adaptive interfaces.

User-in-the-box: Biomechanical, perceptually controlled user models (UIST’22) [Paper][Video]

Ikkala et al. introduce biomechanical, perceptually controlled user models that simulate realistic motor behavior in interactive tasks. Muscle-actuated agents in MuJoCo, linked with perceptual modules and RL policies, reproduce human motion patterns such as Fitts’ Law. The modular framework separates biomechanics, perception, and task design for flexible HCI simulation.

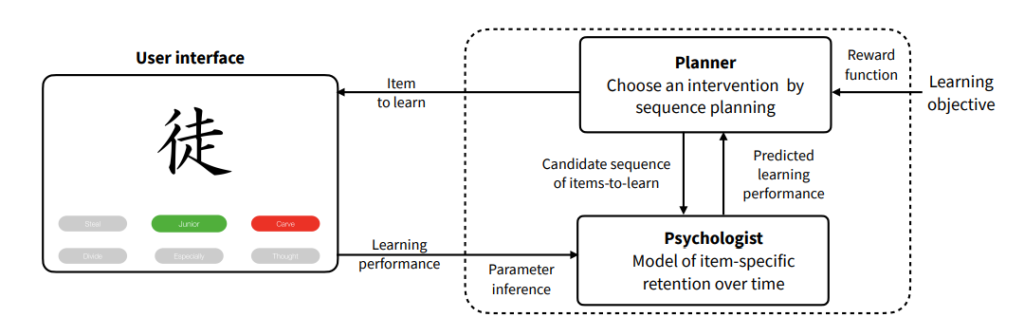

Improving Artificial Teachers by Considering how People Learn and Forget [Paper][Code&Data]

Nioche et al. IUI’21: Presents a computational model of learning and forgetting for adaptive tutoring systems. By modeling human memory decay, the artificial teacher optimizes lesson sequencing and feedback timing to improve user retention.
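As a rough illustration of the idea, the sketch below pairs an exponential forgetting curve with a threshold-based item selector. The functional form, the parameters `alpha` and `beta`, the `threshold` value, and the item names are assumptions made for this example; they are not taken from the paper's code.

```python
import math

def recall_probability(n_reviews, elapsed, alpha=0.05, beta=0.3):
    """Assumed exponential forgetting: each review slows the decay rate."""
    if n_reviews == 0:
        return 0.0  # never-seen items cannot be recalled
    forgetting_rate = alpha * (1.0 - beta) ** (n_reviews - 1)
    return math.exp(-forgetting_rate * elapsed)

def choose_next_item(history, now, threshold=0.9):
    """Pick the item to present next.

    history maps item -> (n_reviews, time_of_last_review).
    Review the weakest at-risk item first; otherwise introduce a new item.
    """
    seen = {item: recall_probability(n, now - t)
            for item, (n, t) in history.items() if n > 0}
    unseen = [item for item, (n, _) in history.items() if n == 0]
    at_risk = {item: p for item, p in seen.items() if p < threshold}
    if at_risk:
        return min(at_risk, key=at_risk.get)
    if unseen:
        return unseen[0]
    return min(seen, key=seen.get)

# Hypothetical learner state: two items already reviewed, one still new.
history = {"item_a": (3, 4.0), "item_b": (1, 5.0), "item_c": (0, 0.0)}
soon = choose_next_item(history, now=6.0)    # memories still fresh
later = choose_next_item(history, now=10.0)  # some memories have decayed
```

Shortly after the last reviews the selector introduces the unseen item; once predicted recall for a reviewed item drops below the threshold, it schedules a review instead.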

Touchscreen Typing as Optimal Supervisory Control [Paper][Code][Data]

Jokinen et al. CHI’21: Develops a control-theoretic model of touchscreen typing, framing typing as an optimal supervisory control process. The model predicts individual trade-offs between speed, accuracy, and effort, validated with behavioral data.

Mobile QoE prediction in the field [Paper]

Boz et al. 2019: Develops a predictive model for mobile Quality of Experience (QoE) using real-world field data. The model combines network metrics, device context, and user feedback to estimate perceived mobile app performance.

May AI?: Design Ideation with Cooperative Contextual Bandits [Paper][Code&Data]

Koch et al. CHI’19: Introduces a cooperative contextual bandit algorithm for AI-assisted design ideation. Designers and AI agents collaboratively explore the design space, with the AI adapting suggestions based on user feedback and exploration patterns.

In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, pp. 633, ACM 2019.
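To make the bandit idea concrete, here is a minimal tabular contextual bandit with a UCB1 exploration bonus. It is a generic sketch, not the cooperative algorithm from the paper; the contexts, arms, and the `simulated_designer` feedback function are hypothetical.

```python
import math
from collections import defaultdict

class ContextualUCB:
    """Keeps a separate UCB1 estimate for every (context, arm) pair."""

    def __init__(self, arms):
        self.arms = list(arms)
        self.counts = defaultdict(int)    # (context, arm) -> times suggested
        self.means = defaultdict(float)   # (context, arm) -> mean feedback
        self.rounds = defaultdict(int)    # context -> rounds observed

    def suggest(self, context):
        # Try every arm once in a new context before trusting estimates.
        for arm in self.arms:
            if self.counts[(context, arm)] == 0:
                return arm
        total = self.rounds[context]

        def upper_bound(arm):
            n = self.counts[(context, arm)]
            return self.means[(context, arm)] + math.sqrt(2.0 * math.log(total) / n)

        return max(self.arms, key=upper_bound)

    def feedback(self, context, arm, reward):
        key = (context, arm)
        self.rounds[context] += 1
        self.counts[key] += 1
        # Incremental update of the running mean.
        self.means[key] += (reward - self.means[key]) / self.counts[key]

def simulated_designer(context, arm):
    """Hypothetical designer who likes one palette per project type."""
    preferred = {"poster": "warm", "dashboard": "mono"}
    return 1.0 if preferred[context] == arm else 0.0

bandit = ContextualUCB(["warm", "cool", "mono"])
for round_index in range(60):
    context = "poster" if round_index % 2 == 0 else "dashboard"
    arm = bandit.suggest(context)
    bandit.feedback(context, arm, simulated_designer(context, arm))
```

After a few dozen rounds the suggestions concentrate on the palette the simulated designer accepts in each context, while the exploration bonus still occasionally revisits the alternatives.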

Neuromechanics of a button press [Paper]

Oulasvirta et al. (CHI’18) propose a neuromechanical model of button pressing that simulates finger movement through muscle, joint, and neural dynamics. It predicts force, timing, and success variability, linking motor neuroscience with HCI to explain adaptive finger movement.

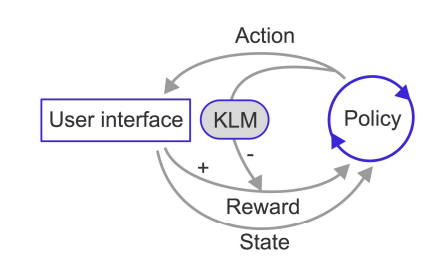

RL-KLM: Automating Keystroke-level Modeling with Reinforcement Learning (IUI’19) [Paper]

Leino et al. IUI’19: RL-KLM formulates the Keystroke-Level Model as a Markov Decision Process and applies reinforcement learning to automate the sequencing of KLM operators. Each keystroke or pointing action is treated as an action, with rewards based on operator time costs. The learned policy minimizes task completion time, replacing manual operator specification for UI evaluation.
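The formulation can be sketched with tabular Q-learning on a toy task. The states, action names, and the "Save" task below are hypothetical, and the operator times are the classic KLM estimates used only as illustrative costs; none of this is taken from the RL-KLM implementation.

```python
import random

# Classic KLM operator estimates in seconds (illustrative costs only).
OPERATOR_TIME = {"K": 0.28, "P": 1.10, "H": 0.40}

# Hypothetical task: trigger "Save" by shortcut or through a menu.
# TRANSITIONS[state][action] = (next_state, KLM operators the action uses)
TRANSITIONS = {
    "hands_on_keyboard": {
        "press_shortcut": ("saved", ["K", "K"]),
        "reach_for_mouse": ("hand_on_mouse", ["H"]),
    },
    "hand_on_mouse": {"click_file_menu": ("menu_open", ["P", "K"])},
    "menu_open": {"click_save_item": ("saved", ["P", "K"])},
}
START, GOAL = "hands_on_keyboard", "saved"

def step(state, action):
    """Reward is the negative time cost of the operators an action uses."""
    next_state, operators = TRANSITIONS[state][action]
    return next_state, -sum(OPERATOR_TIME[op] for op in operators)

def q_learning(episodes=500, alpha=0.5, gamma=1.0, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in acts} for s, acts in TRANSITIONS.items()}
    for _ in range(episodes):
        state = START
        while state != GOAL:
            actions = list(q[state])
            if rng.random() < epsilon:
                action = rng.choice(actions)               # explore
            else:
                action = max(actions, key=q[state].get)    # exploit
            next_state, reward = step(state, action)
            future = 0.0 if next_state == GOAL else max(q[next_state].values())
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q

def greedy_plan(q):
    """Follow the learned policy and add up the predicted task time."""
    state, plan, total_time = START, [], 0.0
    while state != GOAL:
        action = max(q[state], key=q[state].get)
        state, reward = step(state, action)
        plan.append(action)
        total_time -= reward
    return plan, total_time

plan, total_time = greedy_plan(q_learning())
```

Because maximizing the summed negative operator times is the same as minimizing task completion time, the greedy policy recovers the fastest operator sequence without it being specified by hand.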

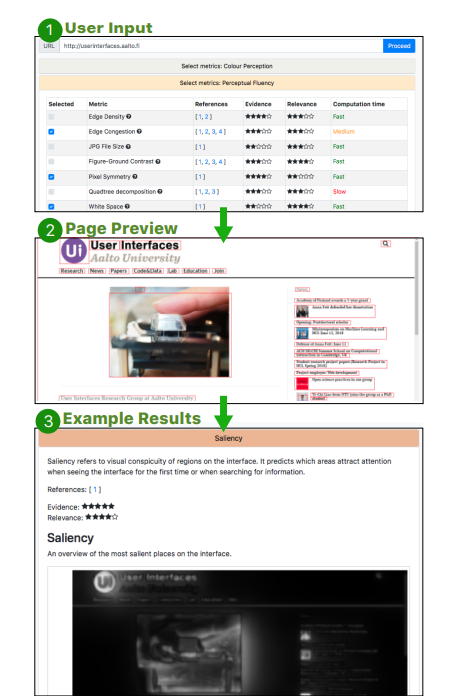

Aalto Interface Metrics (AIM): A Service and Codebase for Computational GUI Evaluation [Paper][Data]

Koch et al. DRS’18: Provides a survey-based dataset on how designers use digital media for creative inspiration. The data capture design workflows, sources, and practices that inform computational creativity tools.

In: The 31st Annual ACM Symposium on User Interface Software and Technology Adjunct Proceedings, pp. 16–19, ACM 2018.

Modeling learning of new keyboard layouts (CHI ’17)

Jokinen et al. (CHI’17) propose a cognitive model of visual search during keyboard learning, combining memory-based and visual strategies. The model simulates how users transition from visual short-term memory to recall-based search as they gain familiarity. It predicts key-finding times and error rates across learning stages, offering a principled account of layout acquisition.

Control Theoretic Models of Pointing [Paper]

Müller et al. (TOCHI’17) introduce Control-Theoretic Models of Pointing, framing cursor motion as a feedback process linking perception and action. Models like Crossover, Surge, and Bang-Bang fit human data, capturing pointing dynamics beyond Fitts’ Law.
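The feedback view of pointing can be illustrated with a generic spring-damper (second-order lag) controller, in which the acceleration of the cursor depends on the remaining error and the current velocity. This is a simplified sketch rather than an implementation of the Crossover, Surge, or Bang-Bang models, and the `stiffness` and `damping` values are arbitrary assumptions.

```python
def simulate_pointing(target, stiffness=50.0, damping=12.0, dt=0.002, steps=1000):
    """Simulate 1D cursor motion toward a target under closed-loop control."""
    position, velocity = 0.0, 0.0
    trajectory = [position]
    for _ in range(steps):
        # Feedback law: pull toward the target, resist current velocity.
        acceleration = stiffness * (target - position) - damping * velocity
        velocity += acceleration * dt
        position += velocity * dt   # semi-implicit Euler integration
        trajectory.append(position)
    return trajectory

# Move the cursor 0.3 units (for example, metres) over 2 simulated seconds.
trajectory = simulate_pointing(target=0.3)
final_error = abs(trajectory[-1] - 0.3)
```

Unlike Fitts' Law, which predicts only the movement time, a model of this kind produces the whole position profile over time, which can then be compared against recorded cursor trajectories.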

Performance and Ergonomics of Touch Surfaces: A Comparative Study using Biomechanical Simulation [Paper]

Bachynskyi et al. CHI’15: Develops biomechanical simulation models to compare touch-surface ergonomics. The models predict user muscle strain, efficiency, and comfort across input devices, supporting ergonomic interface design.

In: Proc. CHI’15, pp. 1817–1826, ACM 2015.